5 min. read

Jessica Bonacci, Content Writer

Jessica is a Google Analytics certified Digital Video Analyst at WebFX. She has created over 100 videos for the WebFX YouTube channel (youtube.com/webfx) in the last two years. Jessica specializes in video marketing and also loves content marketing, SEO, social media marketing, and many other aspects of digital marketing. When she’s not creating videos, Jessica enjoys listening to music, reading, writing, and watching movies.

What is crawlability and indexability? Watch this video with Will from the Internet Marketing team to find out!

Transcript: Google’s search engine results pages (SERPs) may seem like magic, but when you look more closely, you see that sites show up in the search results because of crawling and indexing. This means for your website to show up in the search results, it needs to be crawlable and indexable.

Search engines have these bots we like to call crawlers. They basically find websites on the internet, crawl their content, follow any links on the site, and then create an index of the sites they’ve crawled.

The index is this huge database of URLs that a search engine like Google puts through its algorithm to rank.
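To make the crawl-and-index loop a bit more concrete, here is a minimal sketch in Python. It is not how Google's crawler works internally; it only illustrates the idea of starting at one page, following links, and recording every page reached. The `PAGES` dictionary is a made-up, in-memory stand-in for a website, and the names `LinkExtractor` and `crawl` are hypothetical.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "website": path -> HTML.
# A real crawler would fetch these pages over HTTP instead.
PAGES = {
    "/": '<a href="/clothing">Clothing</a> <a href="/about">About</a>',
    "/clothing": '<a href="/clothing/shirts">Shirts</a> <a href="/">Home</a>',
    "/clothing/shirts": '<a href="/clothing">Back</a>',
    "/about": "No links here.",
    "/hidden": "Nothing links to this page.",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start):
    """Breadth-first crawl from `start`; returns the set of pages reached."""
    index = set()
    queue = deque([start])
    while queue:
        path = queue.popleft()
        if path in index or path not in PAGES:
            continue  # already indexed, or not a page on this site
        index.add(path)
        parser = LinkExtractor()
        parser.feed(PAGES[path])
        queue.extend(parser.links)
    return index


index = crawl("/")
print(sorted(index))
```

In this toy site, `/hidden` never makes it into the index because no page links to it, which is exactly why links matter so much for crawlability.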

You see the results of the crawling and indexing when you search for something and the results pages load. It’s all the sites a search engine has crawled and has deemed relevant to your search based on a bunch of different factors. I won’t touch on the algorithm that Google and other search engines use to figure out what content is relevant to a search, but you can check out our website to learn more.

What is crawlability and indexability?

Crawlability means that search engine crawlers can read and follow links in your site’s content. You can think of these crawlers like spiders following tons of links across the web. Indexability means that you allow search engines to show your site’s pages in the search results.

If your site is crawlable and indexable, that’s excellent!

If it’s not, you could be losing out on a lot of potential traffic from Google’s search results. And this lost traffic translates to lost leads and lost revenue for your business.

How do you know if your site is indexed?

It’s easy. Go to Google or another search engine and type in site:… and then your site’s address. You should see the results for how many pages on your site have been indexed.

If you don’t see anything, don’t worry, I’ll tell you how to fix it and get your site submitted to Google!
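If you check several domains regularly, you could build the search link for a site: query with a few lines of Python. This is only a sketch: `site_query_url` is a hypothetical helper name, and `example.com` is a placeholder for your own domain.

```python
from urllib.parse import urlencode


def site_query_url(domain):
    """Return a Google search URL for the query `site:<domain>`."""
    return "https://www.google.com/search?" + urlencode({"q": f"site:{domain}"})


# example.com is a placeholder; swap in your own site's address.
url = site_query_url("example.com")
print(url)
```

Opening the printed link in a browser runs the same search you would otherwise type by hand.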

How do you get your site pages crawled and indexed?

Internal linking

You want crawlers to get to every page on your site, right?

Then make sure every page on your site has a link pointing to it.

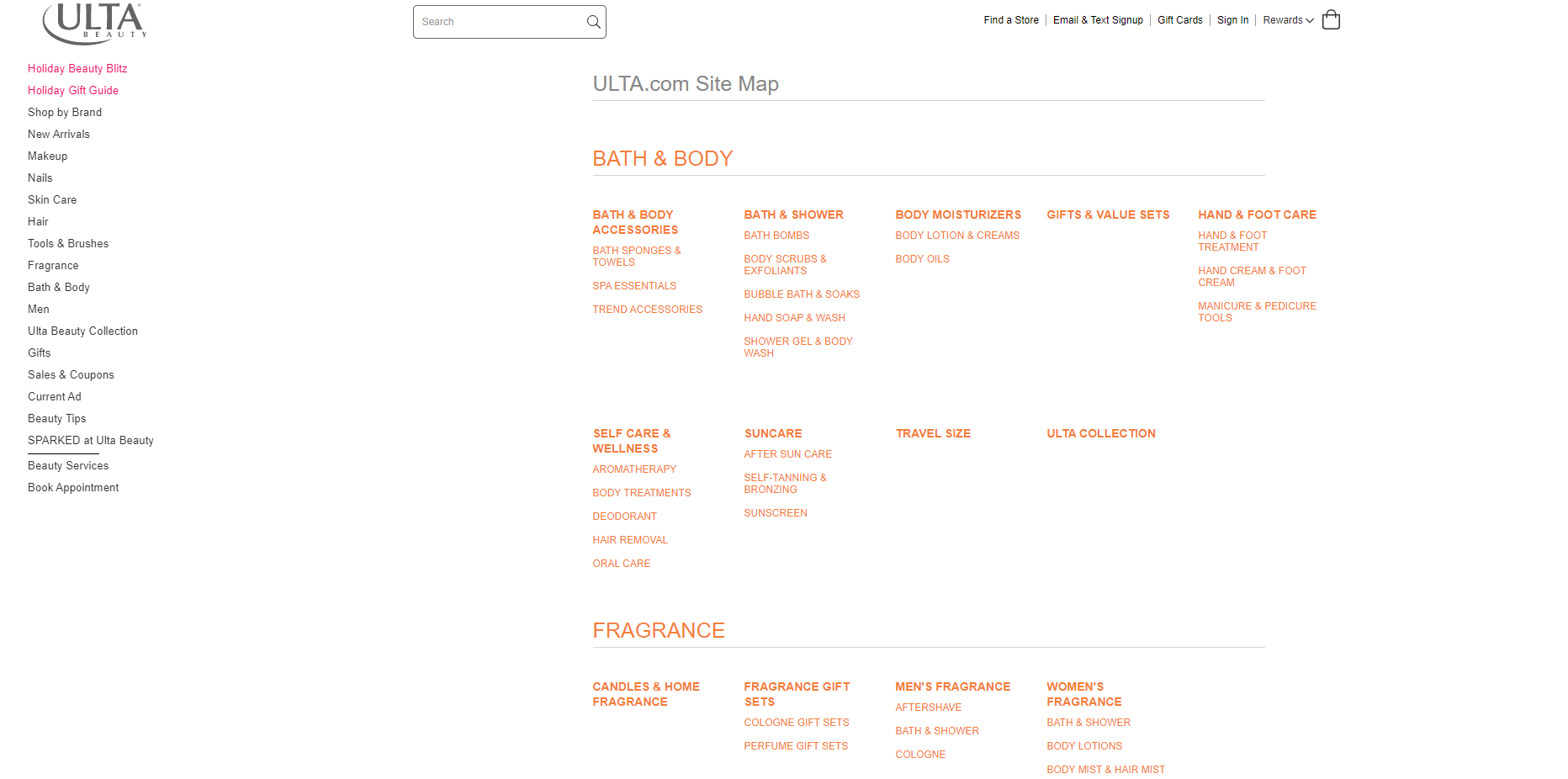

So, looking at Target as an example, you can easily follow the links in their navigation to get from page to page. If you click on women’s clothing, you can see even more links to different types of clothing, and then links to even more specific types of clothing within that menu. There are links leading to every page, which a crawler will follow.

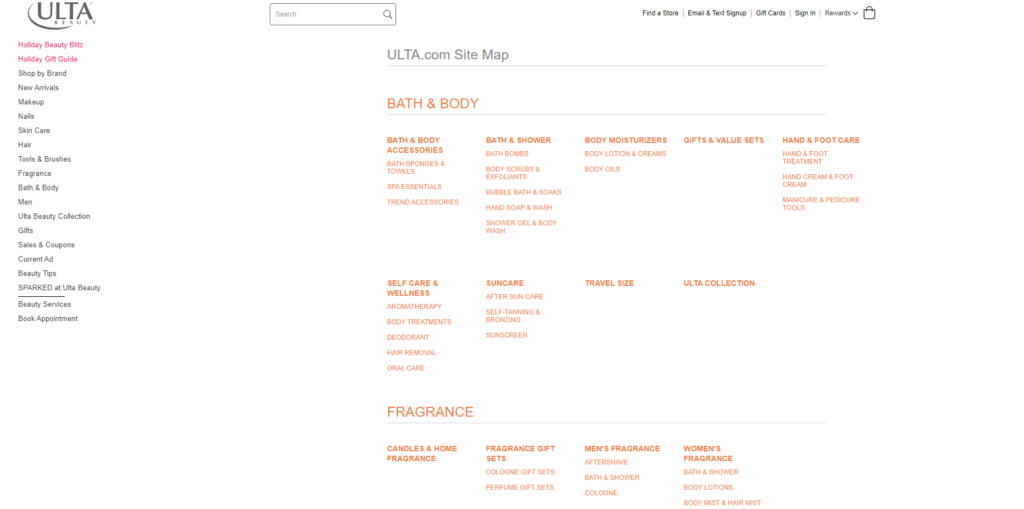

If you don’t have a lot of internal links, HTML sitemaps can give crawlers links to follow on your site.

HTML sitemaps are for people and search engines, and they list links to every page on your site.

You can usually find them in the footer of a site.

But best practice is to include links to every page throughout relevant content and in navigational tabs on your site.
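One way to check the "every page has a link pointing to it" rule is to look for orphan pages, meaning pages that no other page links to. The sketch below assumes you already have a map of which pages link where (for example, exported from a crawling tool). The `LINKS` data and the `orphan_pages` function are made up for illustration.

```python
# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/women", "/men"],
    "/women": ["/women/dresses", "/"],
    "/women/dresses": ["/women"],
    "/men": ["/"],
    "/landing/spring-sale": [],
}


def orphan_pages(links, home="/"):
    """Return pages that no page links to (the home page is exempt)."""
    linked_to = {target for targets in links.values() for target in targets}
    return sorted(
        page for page in links if page not in linked_to and page != home
    )


orphans = orphan_pages(LINKS)
print(orphans)
```

Any page this reports is one a crawler cannot reach by following internal links alone, so it is a candidate for a new link in your navigation or content.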

Backlinks

Again, links matter for your site. But backlinks are much harder to get than internal links because they come from someone outside of your business.

Your site gets a backlink when another site includes a link to one of your pages. So when crawlers are going through that external site, they’ll reach your site through that link as long as they’re allowed to follow it.
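The "allowed to follow it" part usually comes down to the link's rel attribute: a link marked rel="nofollow" asks crawlers not to follow it. The video doesn't go into that detail, so treat the following as an illustrative sketch. The `FollowableLinks` class and the sample HTML (including the `your-site.example` addresses) are hypothetical.

```python
from html.parser import HTMLParser


class FollowableLinks(HTMLParser):
    """Sorts <a> links by whether their rel attribute contains 'nofollow'."""

    def __init__(self):
        super().__init__()
        self.followable = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        # rel can hold several space-separated tokens, e.g. "nofollow sponsored".
        rel_tokens = (attrs.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow.append(href)
        else:
            self.followable.append(href)


# Hypothetical snippet from an external site that links to yours.
html = (
    '<p>Read <a href="https://your-site.example/guide">this guide</a> or '
    '<a rel="nofollow sponsored" href="https://your-site.example/offer">'
    "this offer</a>.</p>"
)

parser = FollowableLinks()
parser.feed(html)
print("followable:", parser.followable)
print("nofollow:", parser.nofollow)
```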

The same happens for other websites if you link to them in your content.

Backlinks are tricky to get, but check out our link building video to learn how you can earn them for your business.

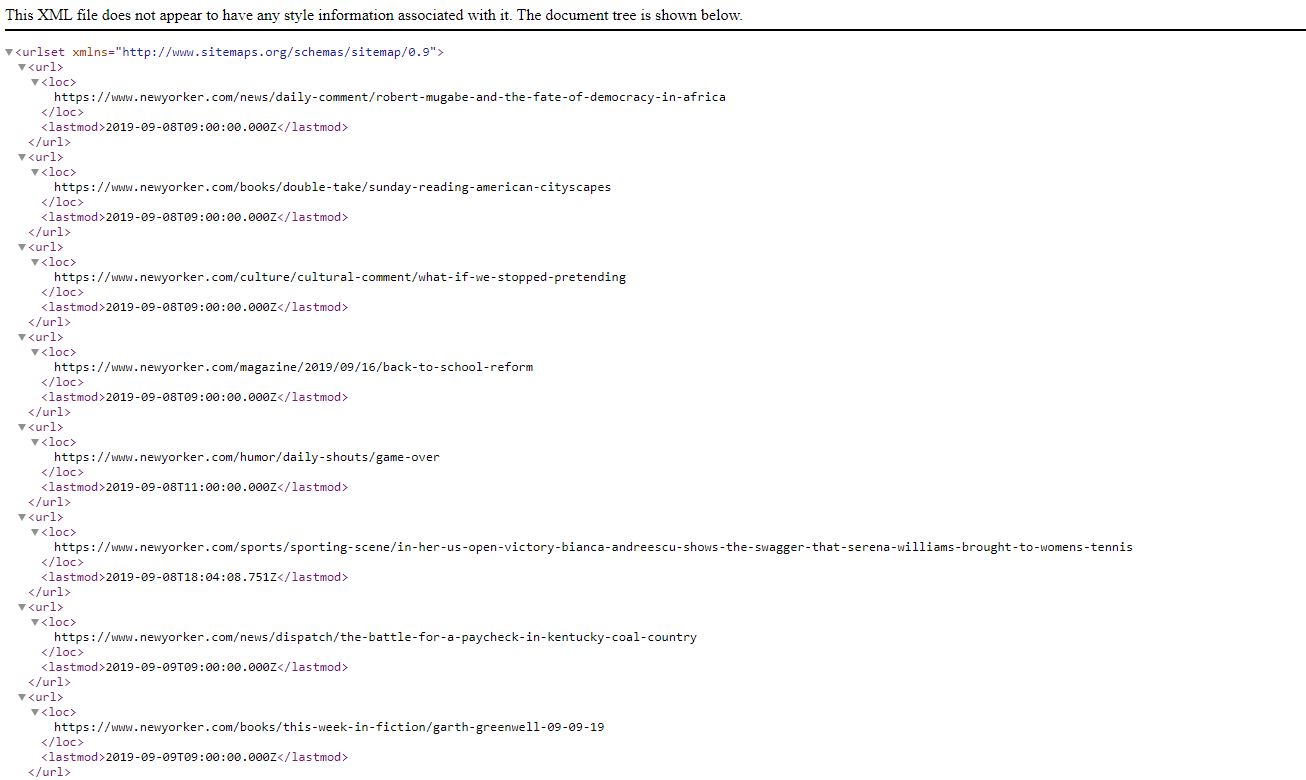

XML sitemaps

It’s good practice to submit an XML sitemap of your site to Google Search Console. Check out our video on XML sitemaps to learn all about them.

But not right now. It’s my time to shine. Here’s a short summary.

XML sitemaps should contain all of your page URLs so crawlers know what you want them to crawl. They’re different from HTML sitemaps because they’re just for crawlers.

You can create an XML sitemap on your own, use an XML sitemap tool, or even use a plugin if it’s compatible with your site’s CMS. But don’t include links you don’t want crawled and indexed in your sitemap. This can be something like a landing page for a really targeted email campaign.
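If you go the "create it on your own" route, a small script can write the file for you. Below is a minimal sketch using Python's standard library and the sitemaps.org namespace. The `build_sitemap` function, the `exclude` parameter, and the `example.com` URLs are assumptions made for this example, not part of any particular tool or plugin.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls, exclude=()):
    """Return sitemap XML listing `urls`, skipping any URL in `exclude`."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        if url in exclude:
            continue  # e.g. a landing page you don't want crawled and indexed
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Placeholder URLs for illustration only.
pages = [
    "https://www.example.com/",
    "https://www.example.com/services",
    "https://www.example.com/email-campaign-landing",
]
sitemap_xml = build_sitemap(
    pages, exclude={"https://www.example.com/email-campaign-landing"}
)
print(sitemap_xml)
```

A real sitemap file would normally start with an XML declaration and be saved as something like sitemap.xml before you submit it in Google Search Console.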

Robots.txt

This one’s a little more technical.

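A robots.txt file is a plain-text file at the root of your site that tells crawlers which parts of your site they shouldn’t crawl. Keep in mind that it controls crawling rather than indexing: a blocked page can still end up in the index if other sites link to it. If you’re familiar with robots.txt, make sure you’re not accidentally blocking a crawler from doing its job.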

If you’re blocking a crawler, your robots.txt file will contain a User-agent line followed by a Disallow rule, such as Disallow: / to block the whole site. The term user-agent refers to the bot crawling your site. So, for example, Google’s crawler is called Googlebot and Bing’s is Bingbot.
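To see how such rules play out, you can test a robots.txt file with Python's built-in `urllib.robotparser` module. The rules below are a made-up example (not taken from the video) in which Googlebot is only kept out of `/private/` while every other crawler is blocked from the whole site. The `example.com` URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, written for this example.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a given user-agent may crawl a given URL.
googlebot_home = parser.can_fetch("Googlebot", "https://www.example.com/")
googlebot_private = parser.can_fetch(
    "Googlebot", "https://www.example.com/private/report"
)
bingbot_home = parser.can_fetch("Bingbot", "https://www.example.com/")

print("Googlebot, home page:", googlebot_home)
print("Googlebot, /private/:", googlebot_private)
print("Bingbot, home page:", bingbot_home)
```

If a check like this shows that a crawler you care about can't reach your important pages, that is a sign a Disallow rule is broader than you intended.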

If you’re not sure how to identify problems or make changes to your robots.txt file, partner with an expert to avoid breaking your website.

Well, that’s all I have for you on crawlability and indexability! If you want to work on improving your site’s SEO strategy, don’t hesitate to contact us! Also, check out our blog for even more internet marketing knowledge! See you later!
