Published: Feb 13, 2026

14 min. read

Thaakirah Abrahams, WebFX Editor

- Thaakirah Abrahams is a Marketing Editor at WebFX, where she leverages her years of experience to craft compelling website content. With a background in Journalism and Media studies and certifications in inbound marketing, she holds a keen eye for detail and a talent for breaking down complex topics for numerous sectors, including the legal and finance industries. When she’s not writing or optimizing content, Thaakirah enjoys the simple pleasures of reading in her garden and spending quality time with her family during game nights.

Table of Contents

- OpenAI’s GPT-5.3-Codex signals the “AI teammate” era for marketing workflows

- The AI teammate era means AI workflow automation at the team level

- What a delegable workflow needs

- 4-step marketing workflow decomposition guide

- 5 workflows that are perfect AI teammate pilots

- Where most teams get it wrong

- Agent-ready workflow criteria

- Want help building an AI teammate for your marketing team?

What is AI workflow design?

AI workflow design involves building repeatable marketing processes that an AI ‘teammate’ can execute from start to finish with clear inputs, explicit rules, defined output formats, and human quality checkpoints, moving beyond single-task AI assistance to full process automation.

How does OpenAI’s GPT-5.3-Codex change marketing automation?

GPT-5.3-Codex is designed for long-running tasks involving planning, tool use, and execution across multiple steps, allowing teams to delegate complete workflows rather than individual tasks while maintaining human oversight through progress updates and steering capabilities.

What are the four essential components of a delegable workflow?

A delegable workflow requires clear inputs (reliable data access), explicit rules (written standards and thresholds), defined output formats (template-able deliverables), and human QA checkpoints (specific approval moments before publishing or spending).

What workflows are ideal for AI teammate pilots?

Perfect candidates include weekly reporting narratives with anomaly detection, competitive intelligence digests, campaign QA workflows for UTM verification, content refresh queues, and sales enablement asset repurposing, all of which have stable output formats and obvious QA gates.

What common mistakes break AI workflow delegation?

Teams fail when they have missing rules (causing inconsistent outputs), unclear ownership (no accountability for quality), no QA gates (allowing hallucinations and errors), messy inputs (incomplete tracking or scattered data), and poorly defined tool access (creating security risks or unusability).

If AI is still just a drafting tool on your team, you’re leaving the biggest gains untouched. The shift now is AI workflow design. This involves building repeatable marketing workflows that an AI “teammate” can run from start to finish with clear inputs, rules, and quality gates.

OpenAI’s GPT-5.3-Codex is the perfect example of this because it’s designed for longer projects that involve multiple steps, real tools, and checkpoints that let you steer it in the right direction. That raises the ceiling on what you can delegate, but only if your workflows are structured enough to run reliably.

In this guide, you’ll learn how to automate workflows with AI, recognize when a workflow is ready, and avoid the mistakes that cause agentic automation to fall apart.

OpenAI’s GPT-5.3-Codex signals the “AI teammate” era for marketing workflows

What is OpenAI’s GPT-5.3-Codex?

OpenAI’s GPT-5.3-Codex is a more agentic model built to handle longer, multi-step work with tool use and ongoing supervision, not just one-off outputs. OpenAI positions it as capable of producing real “knowledge work” deliverables, including computer-based tasks across apps. For marketers, it’s a signal that AI can start owning repeatable workflows end-to-end — but only if you design the workflow with clear inputs, rules, and human checkpoints.

OpenAI recently introduced GPT-5.3-Codex, a model designed to take on long-running tasks involving planning, tool use, and execution — not just single-step assistance. OpenAI says it performs better at tasks that look like real, day-to-day work outputs and structured deliverables, not just writing text.

Here’s what that means for marketing managers designing delegable workflows:

- It can handle full workflows, not just one-off tasks. You can delegate repeatable processes from start to finish, as long as the steps are clear and consistent.

- You’re meant to guide it as it works. The safest setup includes a few points where a human can review, approve, or stop the run.

- It can work across the tools you already use. That’s why it helps to define clean handoffs between analytics, ads, your CRM, docs, and spreadsheets.

- It’s a good fit for recurring deliverables. Weekly and monthly reports, QA checks, and competitive digests are easier to delegate because the format stays the same.

- It’s faster, so iteration is easier. When the model works quicker, it’s more realistic to run a “draft → review → fix → rerun” loop without wasting time.

- Basic access rules still matter. Limit what the AI can touch, decide when it needs approval, and set clear “pause and ask” moments for anything sensitive.

If the model can execute across tools, your advantage comes from designing workflows that it can run reliably.

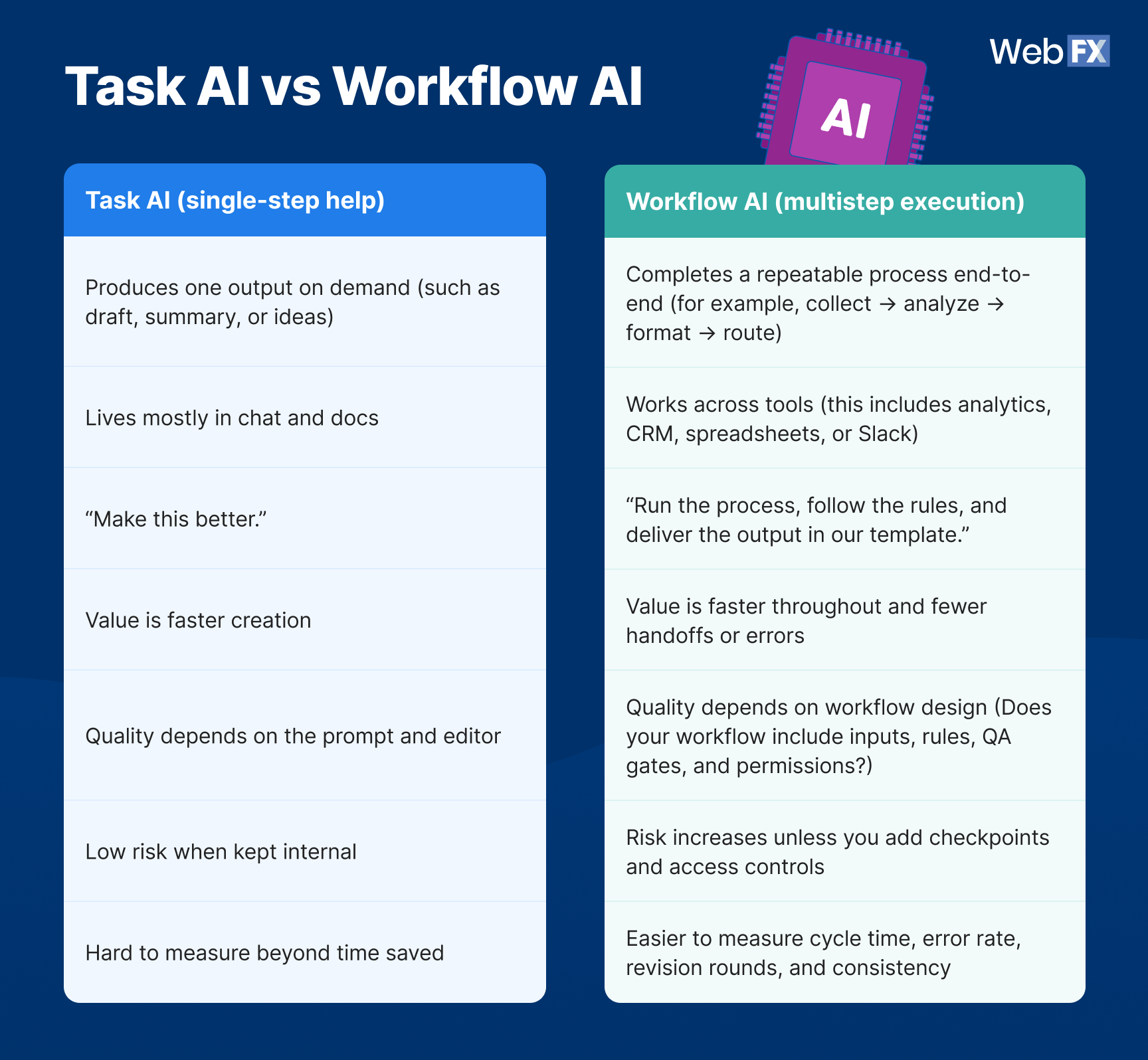

The AI teammate era means AI workflow automation at the team level

Most teams already use AI for tasks like drafting a post, rewriting a paragraph, summarizing a call, and brainstorming subject lines. That’s helpful, but it doesn’t change how work moves through your team.

Marketing workflow automation does. Instead of speeding up one step, it improves speed and consistency across the whole process, including the tool switching, manual checks, copy-paste handoffs, and “did anyone QA this?” moments that slow launches and create rework.

Here’s the difference:

| Task AI (single-step help) | Workflow AI (multistep execution) |

|---|---|

| Produces one output on demand (such as draft, summary, or ideas) | Completes a repeatable process end-to-end (for example, collect → analyze → format → route) |

| Lives mostly in chat and docs | Works across tools (this includes analytics, CRM, spreadsheets, or Slack) |

| “Make this better.” | “Run the process, follow the rules, and deliver the output in our template.” |

| Value is faster creation | Value is faster throughput and fewer handoffs or errors |

| Quality depends on the prompt and editor | Quality depends on workflow design (Does your workflow include inputs, rules, QA gates, and permissions?) |

| Low risk when kept internal | Risk increases unless you add checkpoints and access controls |

| Hard to measure beyond time saved | Easier to measure cycle time, error rate, revision rounds, and consistency |

This shift matters because OpenAI isn’t positioning GPT-5.3-Codex as a “wait for the final answer” model. It’s positioned as something you direct and supervise while it works, with progress updates and the ability to steer mid-run.

That interaction model maps directly to how workflow AI should operate in marketing: with clear checkpoints where a human can approve, correct, or halt the run.

Use this framework to see where you are in your AI marketing journey and what to build toward:

- Task help: AI supports a single step, such as drafting a report summary.

- Workflow delegation: AI executes a repeatable process across tools. For example, pull metrics → compare periods → flag anomalies → draft narrative → route for approval.

- Repeatable systems: The workflow runs on a schedule with templates, rules, permissions, and QA gates.

As agents become more capable, the advantage shifts to the teams that can design workflows humans can steer and supervise reliably, instead of relying on one-off prompts.

What a delegable workflow needs

If you want to know how to build an AI workflow your team can trust, start here. Delegation works when four ingredients are true at the same time:

- Clear inputs: The agent can reliably access the data it needs, such as where it lives, what time frame to use, and what fields matter.

- Explicit rules: Your team has written down what “good” looks like, plus thresholds and edge cases. For example, what to do when numbers spike, what sources are allowed, or what claims need citations.

- Defined output format: The deliverable is template-able. This could include a report structure, a QA pass/fail table, or a standardized brief.

- Human QA checkpoints: Specific moments where a marketer approves, corrects, or halts execution, especially before publish, spend changes, or outbound comms.

If any of these are missing, the agent doesn’t become a teammate. It becomes a faster way to create rework.

4-step marketing workflow decomposition guide

Here’s how to create an AI marketing workflow that can actually run. Use this guide if you want to convert a messy, multi-tool process into an agent-ready brief.

Think of it as translating “how we do this” into a system that an agent can execute with guardrails in place.

- Map a recurring workflow

- Identify the tools needed

- Document the rules and process

- Draft the agent brief

1. Map a recurring workflow

Pick one workflow your team repeats weekly, biweekly, or monthly. Then map it end-to-end:

- Who starts it and what triggers it? Is it a date, a Slack request, or a client meeting?

- What tools does it touch?

- What decisions happen along the way?

- Where do approvals happen?

As you map, highlight handoffs and bottlenecks. Those are usually where delegation pays off.

For example, a weekly PPC performance recap often includes these tasks: pull numbers from ad platforms → compare to last week → explain swings → propose next actions → format into a doc/Slack update → send for review.
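The "compare to last week" and "explain swings" steps in that recap can be sketched in a few lines. This is a minimal illustration, not a prescribed method: the metric names, the 20% threshold, and the `compare_weeks` helper are all assumptions made for the example, and your team's own rules should set the real values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MetricChange:
    name: str
    previous: float
    current: float
    pct_change: Optional[float]  # None when there is no usable baseline
    flagged: bool


def compare_weeks(previous, current, threshold=0.20):
    """Compare this week's metrics to last week's and flag large swings.

    The 20% default threshold is an assumed value for illustration.
    """
    changes = []
    for name, cur in current.items():
        prev = previous.get(name)
        if prev is None:
            # No baseline for this metric: flag it so a human reviews it.
            changes.append(MetricChange(name, 0.0, cur, None, True))
            continue
        if prev == 0:
            # Percent change is undefined; flag any move away from zero.
            pct, flagged = None, cur != 0
        else:
            pct = (cur - prev) / prev
            flagged = abs(pct) >= threshold
        changes.append(MetricChange(name, prev, cur, pct, flagged))
    return changes


# Hypothetical numbers for a weekly PPC recap.
last_week = {"clicks": 1000, "cost": 500.0, "conversions": 40}
this_week = {"clicks": 1050, "cost": 650.0, "conversions": 28}

for change in compare_weeks(last_week, this_week):
    if change.flagged:
        print(f"Review {change.name}: {change.previous} -> {change.current}")
```

With these sample numbers, cost (up 30%) and conversions (down 30%) are flagged, while clicks (up 5%) are not. The flagged items are what the agent would explain in the narrative and route for review.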

2. Identify the tools needed

List every app the workflow uses, whether that is analytics, CRMs, ad platforms, PM tools, spreadsheets, docs, or Slack. Then define what kind of access the workflow requires in each tool, because that determines permissions and where you add review gates.

A simple way to do that is to label each interaction as one of the following:

- Read actions: The agent only needs to retrieve information. Maybe it pulls metrics, checks statuses, reviews logs, or grabs screenshots.

- Write actions: The agent needs to create or update something, such as drafting a report, updating a Google Sheet, opening a Jira/Asana ticket, or posting a draft message in Slack.

- Sensitive actions: The agent would touch high-risk areas: either sensitive data, like customer details or credentials, or high-impact actions, like budget changes, sending emails, or publishing pages. These should default to restricted access and require a human approval step.

For example, if your reporting workflow requires GA4, Search Console, and CRM attribution, you need clear access rules and a consistent schema for what gets pulled, when, and how it’s labeled.
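One way to make the read, write, and sensitive labels concrete is a small lookup that an agent runner consults before each action. The sketch below is an assumption-heavy illustration: the action names and the `authorize` function are hypothetical, not part of any real tool's API.

```python
from enum import Enum


class Access(Enum):
    READ = "read"
    WRITE = "write"
    SENSITIVE = "sensitive"


# Hypothetical action names; replace with the interactions you listed.
ACTION_ACCESS = {
    "pull_ga4_metrics": Access.READ,
    "update_report_sheet": Access.WRITE,
    "post_slack_draft": Access.WRITE,
    "change_ad_budget": Access.SENSITIVE,
    "send_client_email": Access.SENSITIVE,
}


def authorize(action, approved_by=None):
    """Return True if the agent may run the action right now."""
    # Unlabeled actions are treated as sensitive, so the default is restrictive.
    level = ACTION_ACCESS.get(action, Access.SENSITIVE)
    if level is Access.SENSITIVE:
        # Sensitive actions need a named human approver.
        return approved_by is not None
    return True


print(authorize("pull_ga4_metrics"))
print(authorize("change_ad_budget"))
print(authorize("change_ad_budget", approved_by="marketing_lead"))
```

Treating any unlabeled action as sensitive is a design choice in this sketch: it keeps a forgotten entry from silently granting access.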

That’s also why AI and GPT integration matters for many teams before they build a fully custom agent. If you need help ensuring secure connections and reliable data handoffs, WebFX offers AI and GPT integration services to connect AI to marketing processes and tools, so you can streamline work without relying on disconnected outputs.

3. Document the rules and process

Now spell out the standards your team relies on to keep output consistent:

- What metrics matter most, and what thresholds trigger action?

- What explanations are acceptable, and what is speculation?

- What sources are allowed for insights and benchmarks?

- When should the agent stop and ask for approval?

You should also document escalation and stop conditions. If the agent can’t access a tool, sees missing data, or falls below a confidence threshold, it should route to a human — not guess.

OpenAI’s rollout highlights that as agents become more capable, they also require stronger supervision and access controls, including monitoring, trusted access, and fallback behavior. So marketing workflow delegation needs the same basics: scoped permissions, escalation paths, and clear stop conditions.
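The stop conditions described above (a tool the agent can't access, missing data, or low confidence) can be written down as an explicit check. The following is a rough sketch under stated assumptions: `RunState`, `check_stop_conditions`, and the 0.7 confidence floor are invented for illustration, and how confidence is scored is left to your team.

```python
from dataclasses import dataclass, field


@dataclass
class RunState:
    tools_reachable: dict                      # tool name -> True/False
    missing_fields: list = field(default_factory=list)
    confidence: float = 1.0                    # 0.0 to 1.0, scored however you choose


def check_stop_conditions(state, min_confidence=0.7):
    """Return reasons to halt; an empty list means the run may continue."""
    reasons = []
    for tool, reachable in state.tools_reachable.items():
        if not reachable:
            reasons.append(f"cannot access {tool}")
    if state.missing_fields:
        reasons.append("missing data: " + ", ".join(state.missing_fields))
    if state.confidence < min_confidence:
        reasons.append(
            f"confidence {state.confidence:.2f} below {min_confidence:.2f}"
        )
    return reasons


state = RunState({"GA4": True, "CRM": False}, ["utm_source"], confidence=0.5)
reasons = check_stop_conditions(state)
if reasons:
    # In a real workflow this would notify the owner instead of printing.
    print("Stopping and asking a human:", "; ".join(reasons))
```

Returning the list of reasons, rather than a bare true or false, gives the reviewer the context needed to fix the input and rerun.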

4. Draft the agent brief

This is where you reframe steps into an outcome-driven brief that powers your AI marketing automation. Keep it tight and operational. Here’s an AI agent brief template you can use:

AI Agent brief template:

1. Executive summary

- Name of Agent: For example, Weekly Performance Narrator Agent.

- Objective: Write a one-sentence outcome, for example, “Produce a weekly marketing performance narrative with anomalies flagged and next actions recommended.”

- Key Value: Time saved, fewer errors, and faster turnaround. For example, “Reduce reporting time by 60% and cut revision rounds in half.”

2. Workflow mapping

| Current Manual Process | Automated Agent Workflow |

|---|---|

| Trigger: What starts the workflow (weekly cadence, Slack request, client meeting, or a KPI alert)? | Trigger: What starts the agent run (schedule, request, alert, or new data available)? |

| Owner(s): Who is responsible for each part (data pull, analysis, drafting, review)? | Owner(s): Who supervises the run and approves checkpoints (reviewer or final approver)? |

| Inputs: What data is pulled and from where (sources, time frames, and required fields). | Inputs: What data the agent must pull and the exact sources or time frames to use. |

| Tool path: Which tools get opened and in what order (GA4 → Ads → CRM → Sheets → Docs → Slack). | Tool actions: What the agent does inside each tool (retrieve data, update a sheet, draft a doc, create a ticket, or post a draft message). |

| Process steps: The sequence humans follow to get from inputs to output (compile → compare → investigate → write → format → share). | Process steps: The sequence the agent must follow (pull → validate → compare → flag anomalies → draft output → route for review). |

| Decision rules: The judgment calls humans make (thresholds, exclusions, what counts as an issue, or what needs escalation). | Rules applied: The logic the agent must use (thresholds, allowed sources, exclusions, or formatting rules). |

| Quality checks: What humans verify before sharing (accuracy, brand voice, compliance, and link/UTM checks). | Quality gates: Where the agent must pause for human review and what the reviewer approves (draft, recommended changes, and final version). |

| Approval points: Where signoff happens (before publishing, sending, budget changes, or client-facing messaging). | Escalation and stop rules: What the agent must do when data is missing, conflicting, or confidence is low (notify X, stop and ask for input, and never guess). |

| Final output: What “done” looks like and where it’s delivered (doc, slide, dashboard note, Slack update, or ticket). | Final output: Exactly what it delivers, in what format, and where it goes (template doc link, Slack message, ticket, or updated sheet). |

3. Agent capabilities and tools

- Data Inputs: Tools like GA4, Search Console, CRM, ad platforms, spreadsheets, or call transcripts.

- Systems to Integrate (APIs/Access): Google, Meta, HubSpot/Salesforce, Asana/Jira, Slack, or Looker Studio.

- Tools to Use: Browser/computer use, spreadsheet editor, doc builder, data connector, or screenshotter.

- Access Levels:

| Access level | What it means in practice |

|---|---|

| Read access | The agent can view or pull information, such as metrics, statuses, logs, pages, or reports. |

| Write access | The agent can draft or update work outputs like reports, sheets, tickets, or draft Slack messages. |

| Restricted access | High-risk actions or data require approval or are blocked by default. This often relates to budget changes, publishing, sending emails, customer data, credentials, and deleting/editing live assets. |

4. Persona and tone (optional for LLM context)

- Role: For example, “You are a senior marketing operations analyst.”

- Tone: Concise, practical, and skeptical of weak claims; cites sources when needed.

- Output Style Rules: Use bullets for findings, include numbers, avoid hype, and label assumptions.

5. Rules, guardrails, and security

- Quality Standards: Accuracy checks, citation rules, formatting requirements, and brand constraints.

- Approval Gates: Where it must pause for human review.

- Escalation Path: If there is missing data, low confidence, or conflicting signals, who should it notify and how.

- Stop Conditions: When to halt instead of guessing. For example, “If tracking data is incomplete, stop and request confirmation.”

- Data Handling: PII handling, what can or can’t be stored, and retention limits.

- Permissions: Read-only defaults, least-privilege access, and credential handling.

6. Success metrics (KPIs)

Pick 3–6 metrics that match the workflow:

- Time-to-completion: For example, less than 30 minutes from trigger to draft output.

- Revision rounds: For example, 1 round on average.

- Accuracy/error rate: For example, 98% metric accuracy and zero missing required fields.

- Consistency: For example, 100% adherence to the template and required sections.

- Business impact (optional): For example, faster launch cycles, fewer QA issues, and improved reporting adoption.
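The example targets in the list above can be turned into a simple pass/fail check after each run. This is only a sketch: the KPI keys and the `evaluate_run` helper are hypothetical names, the targets are copied from the examples above, and treating each limit as inclusive is an assumption.

```python
# Targets copied from the examples above; replace them with your own.
# "max" means the measured value must be at or below the target,
# "min" means it must be at or above it (inclusive limits are an assumption).
TARGETS = {
    "minutes_to_draft": ("max", 30),
    "revision_rounds": ("max", 1),
    "metric_accuracy": ("min", 0.98),
    "template_adherence": ("min", 1.0),
}


def evaluate_run(measured):
    """Return {kpi: True/False}; a KPI with no measurement counts as a miss."""
    results = {}
    for kpi, (kind, target) in TARGETS.items():
        value = measured.get(kpi)
        if value is None:
            results[kpi] = False
        elif kind == "max":
            results[kpi] = value <= target
        else:
            results[kpi] = value >= target
    return results


# Hypothetical measurements from one weekly run.
print(evaluate_run({
    "minutes_to_draft": 22,
    "revision_rounds": 2,
    "metric_accuracy": 0.99,
}))
```

Counting an unmeasured KPI as a miss keeps gaps in tracking visible instead of letting them pass silently.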

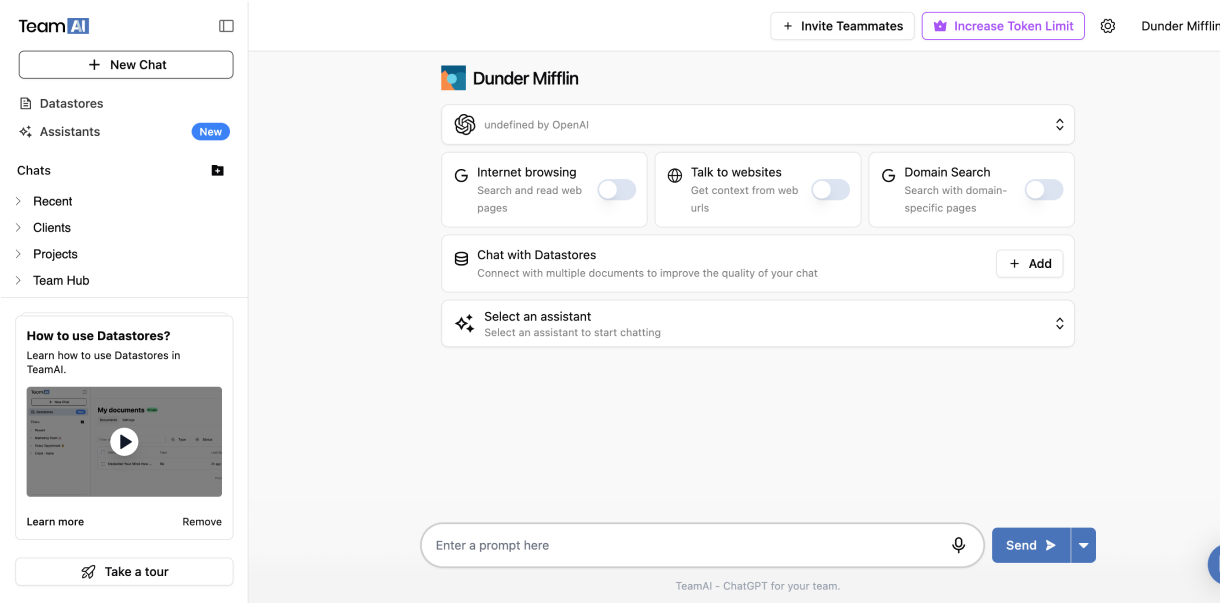

If you can hand that brief to a new hire and they’d understand what “done” looks like, you’re close to agent-ready. TeamAI can help you turn an agent brief into a working workflow with approvals and guardrails, without the extra setup time.

5 workflows that are perfect AI teammate pilots

If you’re wondering which workflow you should automate, here are practical candidates for marketing workflow automation that are repeatable, structured, and measurable.

- Weekly reporting narratives and anomaly notes: An AI agent for this workflow would be able to pull metrics, compare periods, flag spikes or drops, draft insights in your template, and pause for approval.

- Competitive intel digest: The AI can check a defined set of competitor pages, ads, or keywords, log changes, summarize trends, and produce a consistent brief.

- Campaign QA workflow: The AI teammate verifies UTMs, tracking, landing page requirements, links, and naming conventions, then stops at a launch gate.

- Content refresh queue: This AI agent would identify pages to update, suggest improvements, draft updates with sourcing notes, and route for editorial review.

- Sales enablement repurposing: The AI converts one approved asset, such as a case study or webinar, into a set of channel outputs with brand constraints and review steps.

If you’re trying to decide what to delegate, start with workflows where the output format is stable and the QA gate is obvious.
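As an illustration of how structured one of these pilots can be, the UTM verification step of the campaign QA workflow might look like the sketch below. It uses Python's standard `urllib.parse` module; the list of required parameters and the lowercase, no-spaces naming rule are assumptions standing in for your own conventions, and `check_utms` is a hypothetical helper.

```python
from urllib.parse import urlparse, parse_qs

# Assumed convention: these three parameters are required on every campaign URL.
REQUIRED_UTMS = ("utm_source", "utm_medium", "utm_campaign")


def check_utms(url):
    """Return a list of problems found in the URL's UTM parameters."""
    params = parse_qs(urlparse(url).query, keep_blank_values=True)
    problems = []
    for key in REQUIRED_UTMS:
        values = params.get(key)
        if not values or not values[0]:
            problems.append(f"missing {key}")
        elif values[0] != values[0].lower() or " " in values[0]:
            # Assumed naming rule: lowercase values without spaces.
            problems.append(f"{key} should be lowercase with no spaces")
    return problems


# Hypothetical landing page URL from a campaign build sheet.
url = "https://example.com/landing?utm_source=google&utm_medium=CPC"
for problem in check_utms(url):
    print("FAIL:", problem)
```

An empty result means the URL passes this check. Any problems become rows in the QA pass/fail table that a human reviews at the launch gate.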

Where most teams get it wrong

AI can absolutely streamline operations, but it follows your process exactly. If the workflow is unclear or inconsistent, automation tends to produce confusion faster, not results. Common breakdowns look like this:

- Missing rules: The agent can’t infer your standards, so you get inconsistent outputs.

- Unclear ownership: Nobody is accountable for quality, approvals, and updates to the workflow brief.

- No QA gates: Nothing catches hallucinations, wrong data pulls, brand or compliance issues, or “almost right” work that still requires heavy rework.

- Messy inputs: The AI doesn’t complete tasks effectively when you feed it incomplete tracking, inconsistent naming, scattered docs, or conflicting dashboards.

- Poorly defined tool access: Too much permission creates risk. Too little access makes the workflow unusable.

A lightweight safeguard model helps:

- Put human-in-the-loop checkpoints at publish or spend moments.

- Version your agent brief and prompts.

- Define “stop” conditions so the agent asks instead of guessing.

Even OpenAI positions steerability and supervision as essential to effective agent work. So missing QA gates isn’t a minor flaw. It breaks delegation.

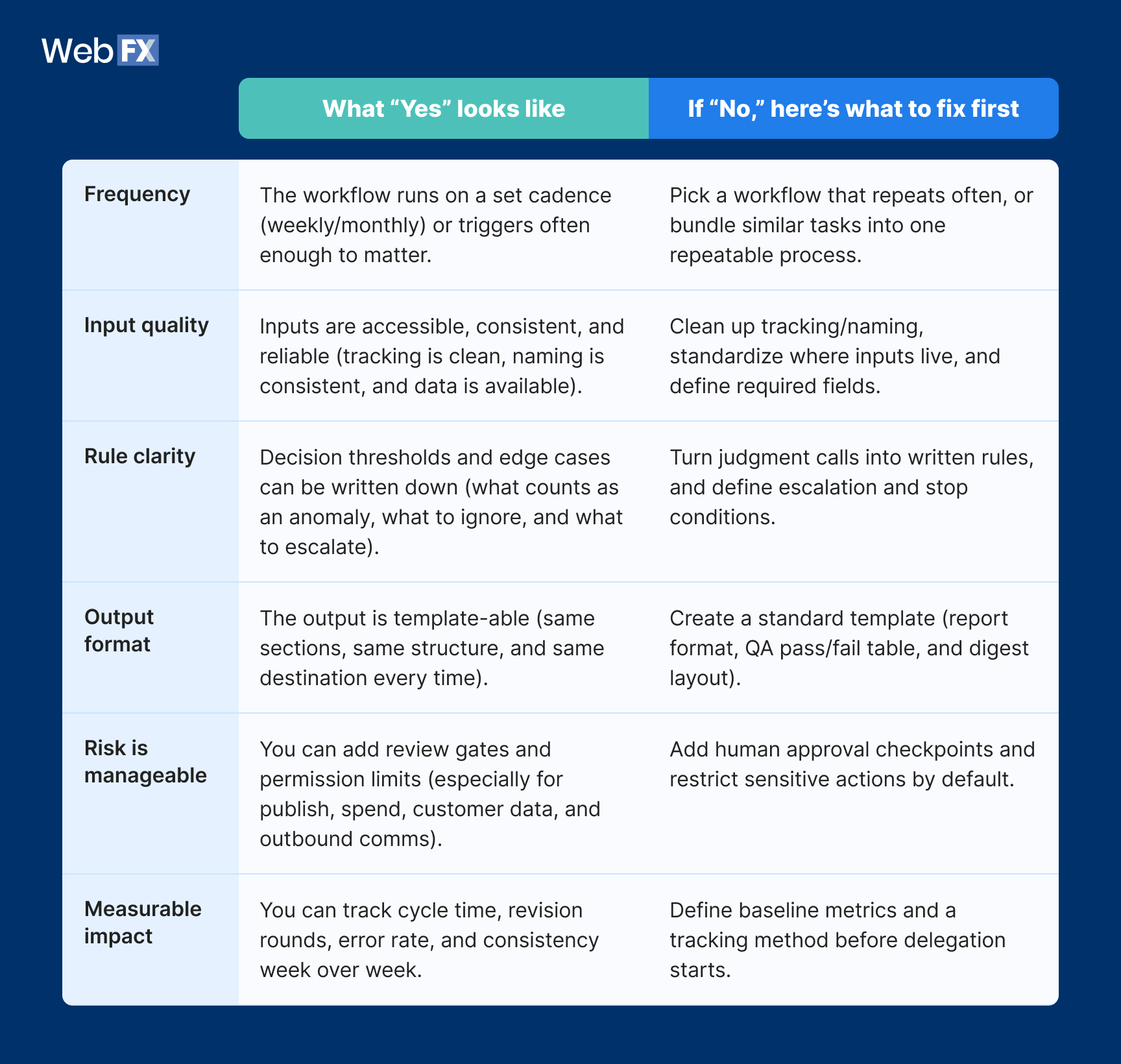

Agent-ready workflow criteria

Use this quick rubric to judge whether a workflow is ready for delegation as part of your AI workflow design process.

A workflow is a good candidate if you can answer “yes” to most of these:

| Criterion | What “Yes” looks like | If “No,” here’s what to fix first |

|---|---|---|

| Frequency | The workflow runs on a set cadence (weekly/monthly) or triggers often enough to matter. | Pick a workflow that repeats often, or bundle similar tasks into one repeatable process. |

| Input quality | Inputs are accessible, consistent, and reliable (tracking is clean, naming is consistent, and data is available). | Clean up tracking/naming, standardize where inputs live, and define required fields. |

| Rule clarity | Decision thresholds and edge cases can be written down (what counts as an anomaly, what to ignore, and what to escalate). | Turn judgment calls into written rules, and define escalation and stop conditions. |

| Output format | The output is template-able (same sections, same structure, and same destination every time). | Create a standard template (report format, QA pass/fail table, and digest layout). |

| Risk is manageable | You can add review gates and permission limits (especially for publish, spend, customer data, and outbound comms). | Add human approval checkpoints and restrict sensitive actions by default. |

| Measurable impact | You can track cycle time, revision rounds, error rate, and consistency week over week. | Define baseline metrics and a tracking method before delegation starts. |

The overall verdict:

- 5–6 “Yes” answers: You’re in a good place. This workflow is ready to delegate — just keep the review gates in place.

- 3–4 “Yes” answers: Close. Start with a smaller version (read-only + draft output), then expand once your inputs and rules are clearer.

- 0–2 “Yes” answers: Not yet. Use the “what to fix” column as your cleanup list, then rerun the rubric.
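The verdict bands above amount to counting "yes" answers, which can be sketched as a small scoring function. The criterion keys and the `rubric_verdict` name are hypothetical labels for the six rows of the rubric; only the bands themselves come from the text.

```python
# Hypothetical keys for the six rubric rows above.
CRITERIA = (
    "frequency",
    "input_quality",
    "rule_clarity",
    "output_format",
    "risk_manageable",
    "measurable_impact",
)


def rubric_verdict(answers):
    """Map yes/no answers for the six criteria to a verdict and a fix list."""
    missing = [c for c in CRITERIA if c not in answers]
    if missing:
        raise ValueError(f"unanswered criteria: {missing}")
    yes_count = sum(1 for c in CRITERIA if answers[c])
    to_fix = [c for c in CRITERIA if not answers[c]]
    if yes_count >= 5:
        verdict = "ready to delegate"
    elif yes_count >= 3:
        verdict = "start with a smaller version"
    else:
        verdict = "not yet"
    return verdict, to_fix


answers = dict.fromkeys(CRITERIA, True)
answers["input_quality"] = False
answers["rule_clarity"] = False
print(rubric_verdict(answers))
```

With four "yes" answers, this example lands in the middle band, and the returned list names the two criteria to fix first.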

Want help building an AI teammate for your marketing team?

Agentic AI changes what’s possible, but results still come down to your AI workflow design. You need clear inputs, explicit rules, defined outputs, and quality gates.

If you want to move from experimentation to a real delegable workflow, WebFX’s AI agent development and AI consulting services are designed to help you scope the right use case, build an agent around your process, integrate it into your tech stack, and maintain guardrails like permissions and escalation paths. Learn more about our AI agent development and AI consulting services today.
