14 min. read

Trevin Shirey

VP of Marketing

Trevin serves as the VP of Marketing at WebFX. He has worked on over 450 marketing campaigns and has been building websites for over 25 years. His work has been featured by Search Engine Land, USA Today, Fast Company and Inc.

Heard of Google’s new update, BERT? You probably have if you’re big on search engine optimization (SEO). The hype over Google BERT in the SEO world is justified because BERT is making search more about the semantics or meaning behind the words rather than the words themselves.

In other words, search intent is more significant than ever. Google’s BERT update affects about 1 in 10 English-language searches in the U.S., and Google expects to expand it to more languages and locales over time. Because of the huge impact BERT will have on searches, having quality content is more important than ever.

In this article, we’ll go over how BERT works with searches and how you can help your content perform its best for BERT (and for search intent) so it brings more traffic to your site. Want to talk with an SEO expert? Connect with WebFX!

What is BERT?

BERT stands for Bidirectional Encoder Representations from Transformers.

Now that’s a term loaded with some very technical machine learning jargon! What it means:

- Bidirectional: BERT encodes sentences in both directions simultaneously

- Encoder representations: BERT translates the sentences into representations of word meaning it can understand

- Transformers: The architecture that lets BERT encode every word in the sentence along with its position, since context depends in large part on word order (a more efficient approach than processing the words strictly one after another, in the order they were entered)
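To make the name a little more concrete, here’s a minimal Python sketch of how a sentence is laid out before BERT encodes it: every word becomes a token tagged with its position (and, when two sentences are fed in together, with which sentence it came from). The `[CLS]` and `[SEP]` markers and the sentence (segment) tags follow the input format described in the BERT paper, where each tag is turned into a learned vector and the vectors are added together. The whitespace tokenizer and the `InputToken`, `tokenize`, and `build_input` names are our own simplifications; real BERT splits words into WordPiece sub-word units.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class InputToken:
    text: str      # the word (real BERT uses WordPiece sub-word units)
    position: int  # where the token sits in the sequence
    segment: int   # 0 = first sentence, 1 = second sentence


def tokenize(sentence: str) -> list[str]:
    """Naive tokenizer (assumption): lowercase, split off '?', split on spaces."""
    return sentence.lower().replace("?", " ?").split()


def build_input(sentence_a: str, sentence_b: str | None = None) -> list[InputToken]:
    """Lay out one or two sentences in the [CLS] A [SEP] B [SEP] format."""
    pieces = [("[CLS]", 0)] + [(w, 0) for w in tokenize(sentence_a)] + [("[SEP]", 0)]
    if sentence_b is not None:
        pieces += [(w, 1) for w in tokenize(sentence_b)] + [("[SEP]", 1)]
    # Tag every token with its position so word order is preserved.
    return [InputToken(text, pos, seg) for pos, (text, seg) in enumerate(pieces)]


for token in build_input("What do pandas eat other than bamboo?"):
    print(token.position, token.segment, token.text)
```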

If you were to reword this, you could say that BERT uses transformers to encode representations of words on either side of a target word. At its base, BERT is a brand-new, state-of-the-art natural language processing (NLP) framework. This type of structure adds a layer of machine learning to Google’s AI that is designed to understand human language better.

In other words, with this new update, Google’s AI algorithms can read sentences and queries with a higher level of human contextual understanding and common sense than ever before. While it doesn’t understand language at the same level that humans can, it’s still a massive step forward for NLP and for machines’ understanding of language.

What BERT is not

Unlike previous algorithm updates such as Penguin or Panda, Google BERT doesn’t change how web pages are judged. It doesn’t rate pages as positive or negative.

Instead, it improves the search results in conversational search queries, so the results better match the intent behind them.

BERT history

BERT has been around longer than the BIG update that rolled out a few months ago. It’s been discussed in the natural language processing (NLP) and machine learning (ML) community ever since October 2018, when the research paper BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding was published. Not long after, Google open-sourced the ground-breaking NLP framework described in the paper so the NLP community could use it for research and incorporate it into their projects.

Since then, there have been several new NLP frameworks based on or incorporating BERT, including ALBERT (from Google and the Toyota Technological Institute at Chicago), Facebook’s RoBERTa, Microsoft’s MT-DNN, and IBM’s BERT-mtl. The waves BERT caused in the NLP community account for most of its mentions on the Internet, but BERT mentions in the SEO world are gaining traction because of BERT’s focus on the language in long-tail queries and on reading websites as humans would to provide better results for search queries.

How does BERT work?

Google BERT is a very complicated framework, and understanding it would take years of study into NLP theory and processes.

The SEO world doesn’t need to go so deep, but understanding what it’s doing and why is useful for understanding how it will affect search results from here on out. So, here’s how Google BERT works:

Google BERT explained

Here’s how BERT takes a look at the context of the sentence or search query as a whole:

- BERT takes a query

- Breaks it down word-by-word

- Looks at all the possible relationships between the words

- Builds a bidirectional map outlining the relationship between words in both directions

- Analyzes the contextual meanings behind the words when they are paired with each other.
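The first four steps above can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the helper names are hypothetical, and a real model works on learned vectors rather than plain word strings. The fifth step, weighing what each pairing means, is the part BERT’s trained network does, and it isn’t shown here. The sketch also shows how quickly the relationships add up once every word is compared with every other word instead of with one target word.

```python
from __future__ import annotations

from itertools import permutations


def break_into_words(query: str) -> list[str]:
    """Step 2: break the query down word by word (punctuation stripped)."""
    return [w.strip("?.,!").lower() for w in query.split()]


def all_relationships(words: list[str]) -> list[tuple[str, str]]:
    """Steps 3-4: every ordered pair of words, so each link runs in both directions."""
    return [(words[i], words[j]) for i, j in permutations(range(len(words)), 2)]


def target_relationships(words: list[str], target: str) -> list[tuple[str, str]]:
    """A simpler view: only the links between one target word and the rest."""
    return [(target, w) for w in words if w != target]


words = break_into_words("What do pandas eat other than bamboo?")  # Step 1: take a query
print(len(target_relationships(words, "pandas")))  # 6
print(len(all_relationships(words)))                # 42
```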

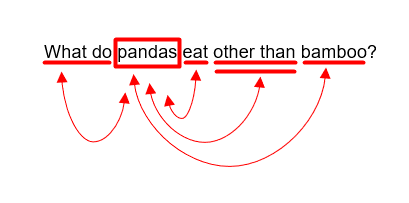

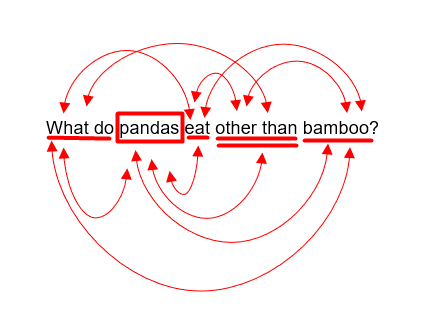

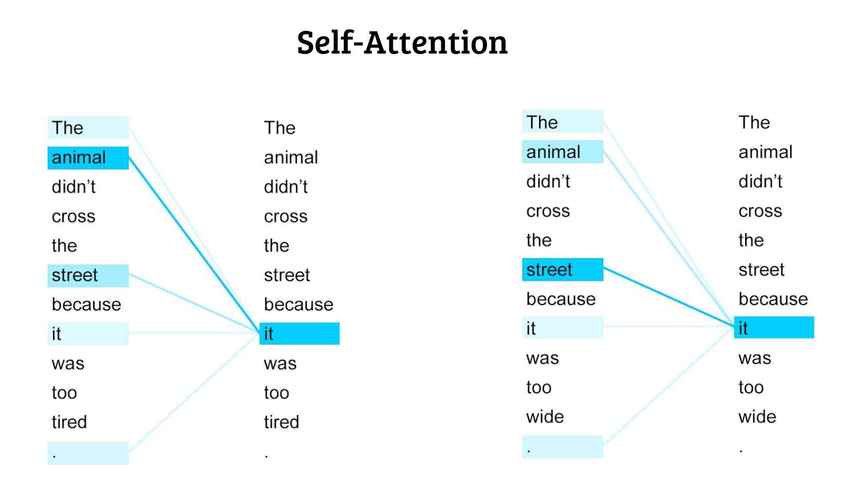

Okay, to better understand this, we’ll use the example query “What do pandas eat other than bamboo?”

Each line represents how the meaning of “panda” changes the meaning of other words in the sentence and vice versa. The relationships go both ways, so the arrows are double-headed. Granted, this is a very, very simple example of how BERT looks at context.

This example examines only the relationships between our target word, “panda,” and the other meaningful segments of the sentence. BERT, however, analyzes the contextual relationships of all words in the sentence, so a diagram connecting every word to every other word would be a bit more accurate.

An analogy for BERT

BERT uses Encoders to analyze the relationships between words. (The original Transformer architecture pairs Encoders with Decoders; BERT is built from the Encoder half.)

Imagining how BERT functions as a translation process provides a pretty good example of how it works. You start with an input, whatever sentence you want to translate into another language. Let’s say you wanted to translate our panda sentence above from English into Korean.

BERT doesn’t understand English or Korean, though, so it uses Encoders to translate “What do pandas eat other than bamboo?” into a language it does understand. This language is one it builds for itself over the course of analyzing language (this is where the encoder representations come in). BERT tags words according to their relative positions and importance to the meaning of the sentence.

It then maps them to abstract vectors (lists of numbers), creating a sort of imaginary language. In the translation analogy, the Encoder converts our English sentence into this imaginary language, and a Decoder converts the imaginary language into Korean. The process is great for translation, but it also improves the ability of any NLP model based on BERT to correctly parse out linguistic ambiguities such as:

- Pronoun reference

- Synonyms and homonyms

- Words with multiple definitions, such as “run”
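Here’s a toy Python sketch of the “abstract vector” idea and how context can settle the meaning of a word like “run.” Everything in it is an assumption for illustration: the three-number vectors are hand-picked rather than learned, and averaging a word with its neighbors is a crude stand-in for BERT’s encoder layers, which weigh each neighbor differently. The point is only that once words live in the same vector space, the surrounding words can pull an ambiguous word toward one meaning or another.

```python
import math

# Hand-picked toy vectors (assumption). The three dimensions loosely stand for
# [business, movement, other]; real BERT-base learns 768-dimensional vectors.
VECTORS = {
    "run": [0.5, 0.5, 0.0],       # ambiguous: halfway between the two senses
    "company": [0.9, 0.0, 0.1],
    "marathon": [0.0, 1.0, 0.0],
    "manage": [1.0, 0.0, 0.0],    # sense 1 of "run"
    "jog": [0.0, 1.0, 0.0],       # sense 2 of "run"
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def context_vector(words):
    """Average the vectors of the known words (a crude stand-in for an encoder)."""
    known = [VECTORS[w] for w in words if w in VECTORS]
    if not known:
        raise ValueError("no known words in the sentence")
    return [sum(values) / len(known) for values in zip(*known)]


def closest_sense(sentence, senses):
    """Pick the sense whose vector is most similar to the sentence's context."""
    ctx = context_vector(sentence.lower().split())
    return max(senses, key=lambda sense: cosine(ctx, VECTORS[sense]))


print(closest_sense("run the company", ["manage", "jog"]))   # manage
print(closest_sense("run the marathon", ["manage", "jog"]))  # jog
```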

BERT is pre-trained

BERT is pre-trained, meaning that it has a lot of learning under its belt. But one of the things that makes BERT different from previous NLP frameworks is that BERT was pre-trained on plain text. Many earlier NLP frameworks required a database of words painstakingly tagged syntactically by linguists to make sense of words.

In such a database, linguists must mark each word for part of speech. It’s a rigorous, demanding process that can spark long-winded, heated debates among linguists. Part of speech can be tricky, especially when a word’s part of speech changes due to other words in the sentence.

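BERT skips this labeling step and learns from raw text on its own, unsupervised. Google describes it as the first deeply bidirectional, unsupervised language representation pre-trained using only a plain text corpus. It was trained using English Wikipedia (that’s more than 2.5 billion words!) along with BooksCorpus, a collection of about 800 million words from books.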

BERT may not always be accurate, but the more text it’s trained on, the more accurate it tends to become.

BERT is bidirectional

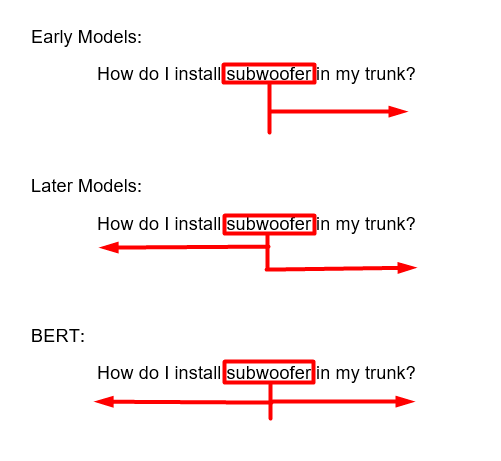

BERT encodes sentences bidirectionally. Put simply, BERT takes a target word in a sentence and looks at all the words surrounding it in either direction. BERT’s deeply bidirectional encoder was unique among NLP frameworks when it was released.

Earlier NLP frameworks such as OpenAI GPT encode sentences in only one direction: left to right. Other models, like ELMo, train on both the left side and the right side of a target word, but they encode each side independently and then concatenate the results. This causes a contextual disconnect between each side of the target word.

BERT, on the other hand, identifies the context of all the words on either side of the target word, and it does it all simultaneously. This means it can fully see and understand how the meaning of words impacts the context of the sentence as a whole.
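The difference between these approaches comes down to which words are visible when a target word is encoded. The Python sketch below only lists those visible words; it doesn’t model the networks themselves, and the function names and the example sentence are our own. Notice that a strictly left-to-right reader encoding “trunk” never sees “car” or “subwoofer,” while BERT sees both at once.

```python
def left_to_right_context(words, i):
    """GPT-style: when encoding word i, only the words before it are visible."""
    return words[:i]


def separate_sides_context(words, i):
    """ELMo-style: a left-to-right pass and a separate right-to-left pass.
    Each side is encoded on its own and the results are joined afterward."""
    return words[:i], words[i + 1:]


def deep_bidirectional_context(words, i):
    """BERT-style: every other word in the sentence is visible at the same time."""
    return words[:i] + words[i + 1:]


# Hypothetical example sentence; the target word is "trunk".
sentence = "the trunk of my car holds a subwoofer".split()
target = sentence.index("trunk")

print(left_to_right_context(sentence, target))       # ['the']
print(separate_sides_context(sentence, target))      # (['the'], ['of', 'my', 'car', 'holds', 'a', 'subwoofer'])
print(deep_bidirectional_context(sentence, target))  # ['the', 'of', 'my', 'car', 'holds', 'a', 'subwoofer']
```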

How words relate to each other (meaning how often they occur together) is what linguists call collocation.

Collocates are words that often occur together: “Christmas” and “presents” are frequently found within a few words of each other, for example. Being able to identify collocates helps determine the meaning of a word. In our example image earlier, “trunk” can have multiple meanings:

- the main woody stem of a tree

- the torso of a person or animal

- a large box for holding travel items

- the prehensile nose of an elephant

- the storage compartment of a vehicle

The only way to determine the meaning of the word used in this sentence is to look at the surrounding collocates. “Subwoofer” often occurs with “car,” as does “trunk,” so the “vehicle storage compartment” definition is likely the right answer according to context. This is precisely what BERT does when it looks at a sentence.

It identifies the context of each word in a sentence by using the word’s collocates learned from its pre-training. If BERT read the sentence in only one direction, it could miss “car,” the collocate that “subwoofer” and “trunk” share. The ability to look at the sentence bidirectionally and as a whole solves this problem.
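Here’s a rough Python sketch of using collocates to pick a meaning for “trunk.” It counts which words appear in the same sentence in a tiny made-up corpus, then picks the sense whose cue word co-occurs most often with the words around “trunk.” The corpus, stop-word list, cue words, and helper names are all assumptions for illustration; BERT doesn’t keep an explicit table like this, it absorbs these patterns into its learned representations during pre-training.

```python
from collections import Counter
from itertools import combinations

# Tiny made-up corpus (assumption); BERT's pre-training text is billions of words.
CORPUS = [
    "the car has a subwoofer in the trunk",
    "we loaded the car and closed the trunk",
    "a loud subwoofer rattled the car",
    "the elephant lifted food with its trunk",
    "moss grew on the tree trunk",
    "the tree lost a branch",
]
STOP_WORDS = {"the", "a", "in", "is", "on", "and", "with", "its", "we", "of"}

# Each sense of "trunk" is represented by one cue word (assumption).
SENSES = {
    "storage compartment of a vehicle": "car",
    "prehensile nose of an elephant": "elephant",
    "main woody stem of a tree": "tree",
}


def content_words(sentence):
    return {w for w in sentence.split() if w not in STOP_WORDS}


def count_collocations(corpus):
    """Count how many sentences each pair of content words appears in together."""
    counts = Counter()
    for sentence in corpus:
        for pair in combinations(sorted(content_words(sentence)), 2):
            counts[pair] += 1
    return counts


def pick_sense(sentence, target, counts):
    """Score each sense by how often its cue word co-occurs with the context words."""
    context = content_words(sentence) - {target}

    def score(cue):
        return sum(counts[tuple(sorted((word, cue)))] for word in context)

    return max(SENSES, key=lambda sense: score(SENSES[sense]))


counts = count_collocations(CORPUS)
print(pick_sense("the subwoofer is in the trunk", "trunk", counts))  # storage compartment of a vehicle
print(pick_sense("a branch fell near the trunk", "trunk", counts))   # main woody stem of a tree
```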

BERT uses transformers

BERT’s bidirectional encoding functions with transformers, which makes sense.

If you remember, the ‘T’ in BERT stands for transformers. Google describes BERT as the result of a breakthrough in its research on transformers. Google defines transformers as “models that process words in relation to all the other words in a sentence, rather than one-by-one in order.” Transformers use Encoders (and, in tasks like translation, Decoders) to process the relationships between words in a sentence.

BERT takes each word of the sentence and gives it a representation of the word’s meaning. In the image below, how strongly each word is related to the others is represented by the saturation of the line. On the left side, “it” is most strongly connected to “the” and “animal,” identifying in this context what “it” is referring to.

On the right, “it” is most strongly connected to “street.” Pronoun reference like this used to be one of the major issues language models had trouble resolving, but BERT can handle it.
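Those line strengths are attention weights. The Python sketch below computes them with scaled dot-product attention, the formula from the Attention Is All You Need paper: each word’s score is the dot product of a query vector with that word’s key vector, divided by the square root of the vector length, and a softmax turns the scores into weights that add up to 1. The example sentence and the two-number vectors are hand-picked assumptions so the result mirrors the image; in a trained model the vectors are learned, and BERT runs many of these attention “heads” in parallel across many layers.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are all positive and sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights: softmax(q . k / sqrt(d))."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)


# Hypothetical sentence and hand-picked 2-D key vectors (assumptions).
words = ["the", "animal", "didn't", "cross", "the", "street"]
keys = [[0.1, 0.1], [2.0, 0.2], [0.2, 0.1], [0.3, 0.3], [0.1, 0.1], [0.3, 1.5]]

# Hand-picked query vectors for "it" in two versions of the sentence.
queries = {
    "...because it was too tired": [2.0, 0.0],
    "...because it was too wide": [0.0, 2.0],
}

for label, query in queries.items():
    weights = attention_weights(query, keys)
    strongest = words[weights.index(max(weights))]
    print(f"{label}: 'it' attends most to '{strongest}'")
```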

If you’re an NLP enthusiast wondering about the nitty-gritty details behind what transformers are and how they work, you can check out this video, which is based on the ground-breaking paper Attention Is All You Need. Both the video and the paper are excellent (but in all honesty, the paper goes straight over my head).

For the rest of us muggles, the technological effects of the transformers behind BERT translate to an update where Google Search better understands the context behind search queries, aka user intent.

BERT uses a Masked Language Model (MLM)

BERT’s training includes predicting words in a sentence using Masked Language Modeling. During training, 15% of the words are hidden behind a mask, like this:

- What do [MASK] eat other than bamboo?

BERT then has to predict what the masked word is. This does two things: it trains BERT in word context, and it provides a means of measuring how much BERT is learning. The masked words prevent BERT from learning to copy and paste the input.
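Here’s a minimal Python sketch of how masked training examples can be generated from plain text. It follows the recipe in the BERT paper: pick about 15% of the positions, then replace 80% of those with `[MASK]`, swap 10% for a random word, and leave 10% unchanged (the paper does this because `[MASK]` never shows up in the text BERT is later used on). The function name, the whitespace tokenization, and using the sentence itself as the pool of random words are simplifications for illustration.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_rate=0.15, rng=None):
    """Create one masked-language-model training example.

    Returns the corrupted token list and an answer key {position: original word}
    that the model would be trained to predict.
    """
    rng = rng or random.Random()
    n_targets = max(1, round(len(tokens) * mask_rate))
    positions = rng.sample(range(len(tokens)), n_targets)
    corrupted = list(tokens)
    answers = {}
    for pos in positions:
        answers[pos] = tokens[pos]
        roll = rng.random()
        if roll < 0.8:          # 80%: hide the word
            corrupted[pos] = MASK
        elif roll < 0.9:        # 10%: replace it with a random word
            corrupted[pos] = rng.choice(vocab)
        # remaining 10%: keep the original word, but still predict it
    return corrupted, answers


tokens = "what do pandas eat other than bamboo".split()
corrupted, answers = mask_tokens(tokens, vocab=tokens, rng=random.Random(0))
print(corrupted)
print(answers)
```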

Next sentence prediction, BERT’s other pre-training task, serves a similar purpose, as does testing BERT on contextual, sometimes unanswerable, questions. The output BERT provides will show that BERT is learning and implementing its knowledge about word context.
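Next sentence prediction examples can also be built straight from plain text, with no human labeling. The Python sketch below follows the setup described in the BERT paper: half the time the second sentence really comes next (`IsNext`), and half the time it’s a random sentence from elsewhere (`NotNext`). The paper draws the random sentence from the rest of its corpus; here, as a simplification, we just use another sentence from the same short list. The function name and sample sentences are our own.

```python
import random


def next_sentence_pairs(sentences, rng=None):
    """Build (first, second, label) next-sentence-prediction examples."""
    if len(sentences) < 3:
        raise ValueError("need at least 3 sentences to draw random non-next ones")
    rng = rng or random.Random()
    pairs = []
    for i in range(len(sentences) - 1):
        if rng.random() < 0.5:
            # 50%: the sentence that actually follows
            pairs.append((sentences[i], sentences[i + 1], "IsNext"))
        else:
            # 50%: any sentence other than this one or its true successor
            others = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            pairs.append((sentences[i], rng.choice(others), "NotNext"))
    return pairs


text = [
    "Pandas live in the mountains of central China.",
    "Bamboo makes up most of their diet.",
    "They spend much of the day eating.",
    "Newborn pandas are very small.",
]
for first, second, label in next_sentence_pairs(text, rng=random.Random(42)):
    print(label, "|", first, "->", second)
```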

What does BERT impact?

What does this all mean for search? Mapping out queries bidirectionally using transformers as BERT does is particularly important.

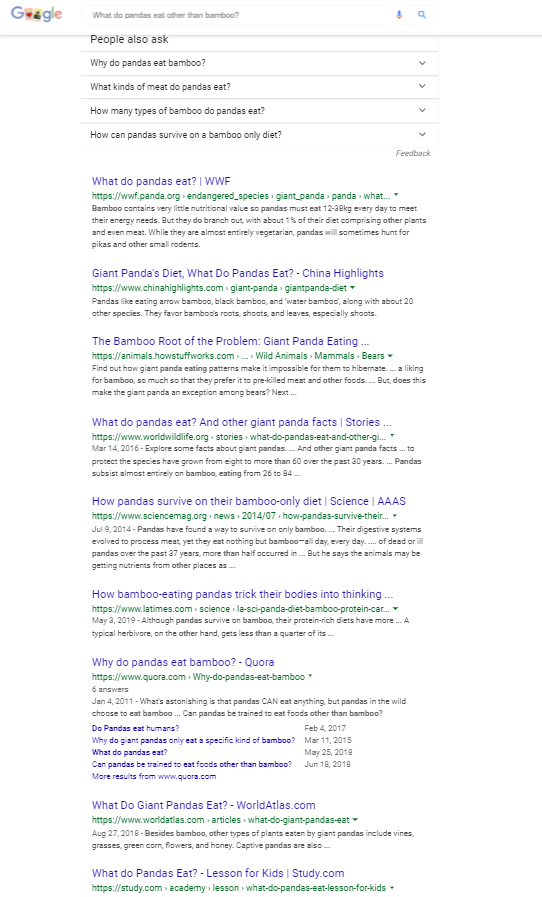

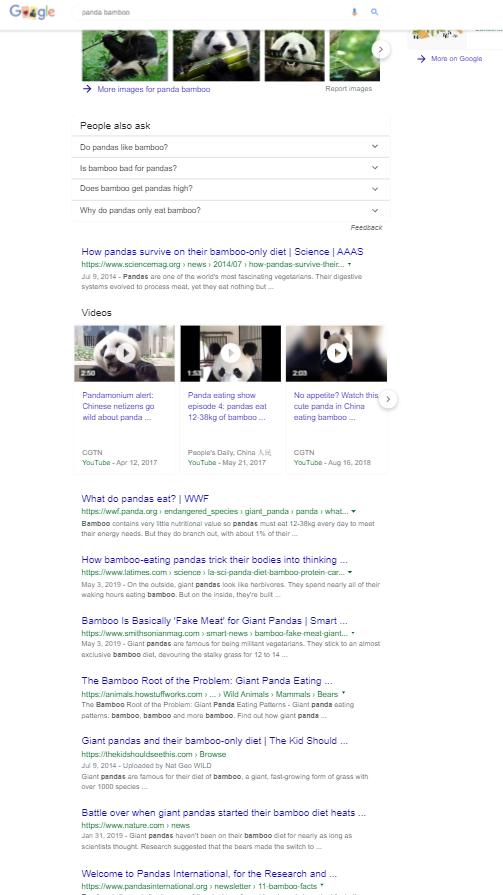

It means that the algorithms are taking into account the slight, but meaningful, nuances behind words like prepositions that could change the intent behind queries drastically. Take these two different search page results, for example. We’re continuing with our earlier pandas and bamboo theme.

The keywords are:

- What do pandas eat other than bamboo

- Panda bamboo

Notice how the results pages are very similar? Almost half the organic results are the same, and the People Also Ask (PAA) sections have some very similar questions. The search intent is very different, though.

“Panda bamboo” is very broad, so the intent is hard to pin down, but the searcher is likely wondering about a panda’s bamboo diet. The search page hits that pretty well. On the other hand, “what do pandas eat other than bamboo” has such a specific search intent that the results on the search page miss it completely.

The only results that came close to hitting the intent were possibly two of the PAA questions:

- What kind of meat do pandas eat?

- How can pandas survive on a bamboo-only diet?

And arguably two of the Quora questions, one of them being quite funny:

- Can pandas be trained to eat foods other than bamboo?

- Do pandas eat humans?

Slim pickings, indeed. In this search query, the words “other than” play a major role in the meaning of the search intent. Before the BERT update, Google’s algorithms would regularly ignore function/filler words like “other than” when returning information.
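To see why dropping words like “other than” matters, here’s a simplified Python sketch of keyword-style matching. The stop-word list and the crude plural stripping are hypothetical; Google hasn’t published exactly how its pre-BERT systems treated these words. Once “what,” “do,” “other,” and “than” are thrown away, the two panda queries look almost identical, even though their intent is very different.

```python
import string

# Hypothetical stop-word list for illustration only.
STOP_WORDS = {"what", "do", "other", "than", "the", "a", "of"}


def keywords(query):
    """Keyword view of a query: lowercase, no punctuation, no stop words,
    and a crude singular form (a trailing 's' is dropped)."""
    words = query.lower().translate(str.maketrans("", "", string.punctuation)).split()
    return {w[:-1] if w.endswith("s") else w for w in words if w not in STOP_WORDS}


def overlap(a, b):
    """Jaccard similarity: shared keywords divided by all distinct keywords."""
    return len(a & b) / len(a | b)


long_query = keywords("What do pandas eat other than bamboo?")
short_query = keywords("Panda bamboo")
print(sorted(long_query))    # ['bamboo', 'eat', 'panda']
print(sorted(short_query))   # ['bamboo', 'panda']
print(round(overlap(long_query, short_query), 2))  # 0.67
```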

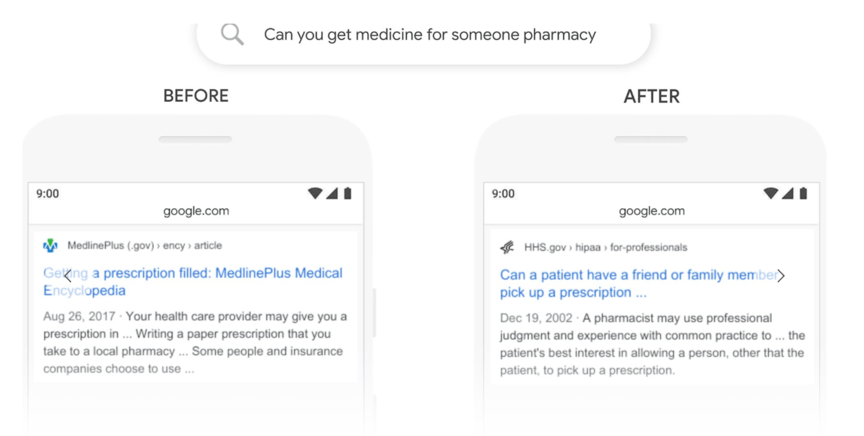

This resulted in search pages that failed to match the search intent like this one. Since BERT only affects 10% of search queries, it’s not too surprising that the left page hasn’t been affected by BERT at the time of writing this. This example that Google provides on their BERT explained page shows how BERT affects the search results:

Featured snippets

One of the most significant impacts BERT will have will be on featured snippets. Featured snippets are organic and rely on machine learning algorithms, and BERT fits the bill exactly.

Featured snippets are most often pulled from results on the first search results page, but now there might be some exceptions. Because they’re organic, lots of factors can make them change, including new algorithm updates like BERT. With BERT, algorithms that affect featured snippets can better analyze the intent behind search queries and better match search results to them.

It’s also likely that BERT will be able to take the lengthy text of results, find the core concepts, and summarize the content as featured snippets.

International search

Since languages share many underlying grammatical rules, what BERT learns in one language can carry over to others and improve search in those languages. Every time BERT learns a new language, it gains new language skills, and these skills can help it understand other languages, including ones it has seen little of, with higher accuracy.

How do I optimize my site for BERT?

Now we get to the big question: How to optimize for Google BERT?

Short answer? You can’t. BERT is an AI framework.

It’s a machine learning model: its decisions come from patterns it picked up in training, not from rules anyone wrote down. That means not even BERT’s developers can predict every choice BERT will make, and BERT itself can’t explain why it makes the decisions it does.

If it doesn’t know, then SEOs can’t optimize for it directly. What you can do to rank in the search pages, though, is to continue to produce human-friendly content that fulfills search intent. BERT’s purpose is to help Google understand user intent, so optimizing for user intent will optimize for BERT.

- So, do what you’ve been doing.

- Research your target keywords.

- Focus on the users and generate content that they want to see.

Ultimately, when you write content, ask yourself:

- Can my readers find what they are looking for in my content?

Get your site BERT-ready with WebFX!

How can WebFX get your site ready for Google BERT? WebFX is a full-service digital marketing company. We’ve been in the optimizing business for over 28 years.

With over 500 professional content writing specialists, we’ll get your website on track with content that matches the search intent of any keyword you could want. We’re the best at SEO! Contact us online or call us at 888-601-5359 to learn more!
