Published: Oct 17, 2023

9 min. read

Maria Carpena

Emerging Trends & Research Writer

Maria is an experienced marketing professional in both B2C and B2B spaces. She’s earned certifications in inbound marketing, content marketing, Google Analytics, and PR. Her favorite topics include digital marketing, social media, and AI. When she’s not immersed in digital marketing and writing, she’s running, swimming, biking, or playing with her dogs.

Today, businesses have access to an abundance of data that fuels their marketing strategies. It’s no longer just business intelligence or data teams that must be well-versed in data terms.

To align different teams on the basic terms, here’s a data glossary to help other team members understand and appreciate data:

Want to get the latest intel about data and digital marketing? Subscribe to our free newsletter to get up-to-date information delivered to your inbox. You can also reach us at 888-601-5359 to speak to a strategist!

Data glossary: A to C

A

A/B testing

A/B testing is a form of hypothesis testing in which one variant is a control, and the other is a test to determine which variant performs better based on a particular metric. Also called split testing, A/B testing is widely used in digital marketing for experimenting with and finding better-performing landing pages, email marketing subject lines, and marketing messages.
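As a rough illustration of how an A/B test result might be evaluated, the sketch below compares the conversion rates of a control (A) and a test variant (B) with a two-proportion z-test. The function name and the visitor and conversion counts are made up for the example, and a real experiment may call for a different statistical test.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) comparing conversion rates of A (control) and B (test)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: 200 of 5,000 visitors converted on A, 250 of 5,000 on B.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference between the variants is unlikely to be due to chance alone.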

API

An application programming interface or API is a set of rules and protocols that detail how different apps can talk to each other.

B

Batch processing

Batch processing is the process of running high-volume, processor-intensive, and repetitive data tasks that can be done without manual intervention. Batch processing is often scheduled to run during off-peak times.

Big data

Big data is a term that refers to data sets so large and complex that traditional processing methods can’t handle them. Big data helps businesses make data-backed decisions.

Business analytics

Business analytics is the process of using historical data to predict future trends, outcomes, or business performance. It is a subset of business intelligence.

Business intelligence

Business intelligence is the process of collecting, storing, and analyzing business operations’ data to make informed decisions.

C

Customer data onboarding

Customer data onboarding is the process of loading customer data from an outside source — online or offline — into a specific system. Customer data onboarding is an important first step before a customer can start using a product.

Customer data platform

A customer data platform (CDP) is a type of software that collects, consolidates, and stores customer data from various sources to create unified customer profiles.

Glossary of data terms: D to E

D

Dashboard

A dashboard is a visualization tool that shows real-time or historical data to track different metrics, key performance indicators (KPIs), or conditions to better understand business performance or the competitive landscape.

Data analytics

Data analytics is the process of collecting, analyzing, and organizing raw data to spot trends and better understand a business’s processes and strategies.

Data architecture

A data architecture is the flow of data in an organization — from data gathering and transformation to consumption. A data architecture aims to address business needs by creating data and system requirements that manage data flow within the business.

Data augmentation

Data augmentation is a tactic that increases the amount of training data by creating modified copies of existing data.
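To make the idea concrete, here is a minimal sketch that augments a tiny grayscale image, represented as a list of pixel rows. The `augment` function and the sample pixel values are illustrative assumptions. Real projects usually rely on an image or machine learning library for this.

```python
def augment(image):
    """Return modified copies of a tiny grayscale image (a list of pixel rows)."""
    horizontal_flip = [row[::-1] for row in image]           # mirror left to right
    vertical_flip = [row[:] for row in image[::-1]]          # mirror top to bottom
    brighter = [[min(255, pixel + 20) for pixel in row] for row in image]  # cap at 255
    return [horizontal_flip, vertical_flip, brighter]

original = [[0, 50, 100], [150, 200, 250]]
# The training set now holds the original plus three modified copies.
training_set = [original] + augment(original)
print(len(training_set))
```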

Data capture

Data capture is the process of gathering data and converting it into a format that a computer can read.

Data catalogue

A data catalogue is a detailed inventory of an organization’s data assets. A data catalogue uses metadata so that team members can find and access data. It provides details on a data asset’s:

- Content descriptions

- Owner

- Lineage or where the data comes from

- Frequency of updates

- How the data can be used

Data center

A data center is an organization’s physical facility that houses its networked computer servers for its operations.

Data cleansing

Data cleansing is the process of preparing data for analysis. Also called data cleaning, data cleansing involves identifying incorrect, incomplete, duplicate, or improperly formatted data, and then either removing or correcting it.
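A simple cleansing pass might look like the sketch below. It assumes contact records with `name` and `email` fields (hypothetical field names), fixes formatting, drops incomplete rows, and removes duplicates by email address.

```python
def cleanse(records):
    """Normalize formatting, drop incomplete rows, and remove duplicates by email."""
    seen = set()
    cleaned = []
    for record in records:
        name = (record.get("name") or "").strip()
        email = (record.get("email") or "").strip().lower()
        if not name or "@" not in email:
            continue  # incomplete or clearly invalid row
        if email in seen:
            continue  # duplicate of a row already kept
        seen.add(email)
        cleaned.append({"name": name.title(), "email": email})
    return cleaned

raw = [
    {"name": " ada lovelace ", "email": "ADA@Example.com"},
    {"name": "Ada Lovelace", "email": "ada@example.com "},
    {"name": "", "email": "nobody@example.com"},
    {"name": "Grace Hopper", "email": None},
    {"name": "grace hopper", "email": "grace@example.com"},
]
clean = cleanse(raw)
print(clean)
```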

Data confidentiality

Data confidentiality is a set of rules that protects data. It prevents unauthorized data access by setting restrictions.

Data curation

Data curation is the process of organizing and integrating data collected from different sources to ensure it’s accurate and relevant.

Data engineer

A data engineer is a professional who builds and maintains data infrastructures. A data engineer works closely with data scientists to maintain data pipelines and data storage solutions.

Data enrichment

Data enrichment is the process of enhancing or refining an organization’s first-party data with relevant third-party data for a more comprehensive data set that is useful for the business.

Data extraction

Data extraction is the process of collecting data from various sources for further processing, analysis, or storage.

Data governance

Data governance is an organization’s framework defining the processes, rules, and responsibilities for effective data handling to ensure privacy and security.

Data health

Data health refers to how well an organization’s data supports its business objectives.

Data hygiene

Data hygiene refers to an organization’s processes to ensure its data is error-free and clean.

Data ingestion

Data ingestion is the process of transporting data from various sources to a centralized database, where it can be accessed and analyzed.

Data insights

A data insight is a key finding from data analysis. Businesses can use data insights to inform their strategies.

Data integration

Data integration is the process of consolidating data from various sources to create a unified view.

Data integrity

Data integrity is the accuracy, consistency, and security of an organization’s data, along with its compliance with applicable regulations.

Data interoperability

Data interoperability is the ability of systems and software to use diverse datasets from different formats and locations.

Data lake

A data lake is a centralized storage of raw data. It’s a low-cost data storage solution that organizations can use to collect high volumes of data in their original format.

Data lineage

Data lineage is a record of where data originated, and where it went before its final storage.

Data literacy

Data literacy refers to an organization’s ability to understand, create, and communicate with data.

Data mapping

Data mapping is the process of matching data fields from one source to another. It is an essential first step before data migration and other data management tasks.
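In its simplest form, a data mapping can be written as a lookup from source field names to target field names. The field names below are invented for illustration and don’t come from any particular system.

```python
# Hypothetical mapping from a source system's field names to a target schema.
FIELD_MAP = {"fname": "first_name", "lname": "last_name", "mail": "email"}

def map_record(source_record, field_map):
    """Rename fields according to field_map; source fields not in the map are dropped."""
    return {target: source_record.get(source) for source, target in field_map.items()}

source = {"fname": "Ada", "lname": "Lovelace", "mail": "ada@example.com", "legacy_id": 7}
mapped = map_record(source, FIELD_MAP)
print(mapped)
```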

Data masking

Data masking is a data security tactic that replaces sensitive data with anonymized data to protect private information. This technique is typically used in training or testing when actual data is not needed.
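As one possible approach, the sketch below masks an email address and a phone number so test data keeps a realistic shape without exposing the real values. The function names and sample values are assumptions for the example. Production masking usually follows an organization’s own security policy.

```python
def mask_email(email):
    """Keep the first character of the local part and the domain; hide the rest."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * (len(local) - 1)}@{domain}"

def mask_phone(phone):
    """Replace every digit except the last four with an asterisk."""
    total_digits = sum(ch.isdigit() for ch in phone)
    seen = 0
    masked = []
    for ch in phone:
        if ch.isdigit():
            seen += 1
            masked.append(ch if seen > total_digits - 4 else "*")
        else:
            masked.append(ch)  # keep separators so the format stays recognizable
    return "".join(masked)

print(mask_email("maria@example.com"))
print(mask_phone("555-010-1234"))
```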

Data mesh

A data mesh is a decentralized data architecture that gives each team ownership of its own data. As a result, each team within an organization can manage its own data services and APIs without affecting other teams’ data.

Data mining

Data mining is the process of discovering patterns and correlations in large datasets. Using machine learning, data mining turns raw data into valuable insights.

Data modeling

Data modeling is the process of creating visual representations of data elements and their relationships.

Data onboarding

Data onboarding refers to the process of transferring data into an app or system.

Data orchestration

Data orchestration is the process of gathering data from different locations and organizing it into a consistent format for analysis.

Data privacy

Data privacy refers to properly handling sensitive, personal, and confidential data, to meet data protection regulation requirements. It involves getting the data owner’s consent for data collection, notifying them how their data will be used, and complying with data protection regulations.

Data science

Data science is a field that combines math, statistics, analytics, and machine learning to draw out insights from structured and unstructured data, and inform an organization’s decisions and strategies.

Data scientist

A data scientist is a professional who uses tools to collect, analyze, and interpret data to help an organization solve problems with data-backed solutions.

Data scrubbing

Data scrubbing is the process of modifying or removing incomplete, inaccurate, outdated, duplicate, or incorrectly formatted data from a database.

Data security

Data security is the practice of protecting an organization’s data from unauthorized access or corruption throughout its lifecycle. It involves:

- Protection of the hardware and storage devices

- Data access regulation

- Securing the software apps used for data

Data stack

A data stack is a suite of tools that an organization uses for data loading, storage, transformation, and analysis.

Data transformation

Data transformation is the process of converting data from one format or structure to another. It is an essential step in data integration and management tasks.

Data validation

Data validation is a process that tests the accuracy and quality of data against a set of criteria before it is processed.
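A validation step could be sketched as a set of per-field rules that each record must pass before processing. The rules and field names here are illustrative assumptions, and the email pattern is deliberately loose.

```python
import re

# Hypothetical criteria: each rule returns True when the value is acceptable.
RULES = {
    "email": lambda v: isinstance(v, str)
    and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
}

def validate(record, rules):
    """Return the names of the fields that fail their rule (an empty list means valid)."""
    return [field for field, check in rules.items() if not check(record.get(field))]

print(validate({"email": "ada@example.com", "age": 36}, RULES))
print(validate({"email": "not-an-email", "age": 200}, RULES))
```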

Data visualization

Data visualization is the process of creating charts, graphs, maps, and other visual aids like animations to make data easier to understand. Data visualization also makes it easier to spot trends and patterns in data.

Data warehouse

A data warehouse is an organized repository for a business’s structured and filtered data.

Data wrangling

Data wrangling is the process of transforming raw data into a different format to make it easier to access and analyze.

Database

A database is an organized collection of structured data stored in a computer system.

E

ELT

ELT stands for extract, load, and transform. ELT is a data integration process in which data is extracted from a source and loaded into a repository like a data warehouse without any prior transformation. The data is then transformed inside the repository.

ETL

ETL stands for extract, transform, and load. ETL is the process of collecting and combining data from different sources into a repository like a data warehouse. ETL cleans and organizes raw data before storing it.
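The three ETL steps can be sketched with Python’s standard library. In this example, an in-memory SQLite database stands in for the data warehouse, and the CSV content, table name, and column names are all made up for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from files, APIs, or databases.
SOURCE_CSV = """email,amount
ADA@example.com,10.50
grace@example.com,
ada@example.com,4.50
"""

def extract(text):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows with no amount, normalize emails, convert amounts to numbers."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue
        cleaned.append((row["email"].strip().lower(), float(row["amount"])))
    return cleaned

def load(rows, connection):
    """Load: store the cleaned rows in the repository."""
    connection.execute("CREATE TABLE IF NOT EXISTS orders (email TEXT, amount REAL)")
    connection.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    connection.commit()

warehouse = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(SOURCE_CSV)), warehouse)
total = warehouse.execute(
    "SELECT email, SUM(amount) FROM orders GROUP BY email"
).fetchall()
print(total)
```

In an ELT process, the `load` step would run before `transform`, with the raw rows stored first and cleaned inside the repository afterward.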

Data science terms: F to U

F

First-party data

First-party data is data that organizations directly collect from their audience or customers. First-party data offers valuable insights to businesses like yours and enables you to implement personalized marketing strategies.

M

Metadata

Metadata is data that describes a data set. For example, a document’s metadata can include its subject, creation date, and document type.

R

Raw data

Raw data is a set of data that is collected from different sources and hasn’t been processed.

Reverse ETL

Reverse ETL is the process of moving data from a data warehouse into another system.

S

Structured data

Structured data refers to data that’s organized in a predefined format before being stored so that users and machines can easily search and read it.

U

Unstructured data

Unstructured data refers to data that hasn’t been arranged in a predefined format.

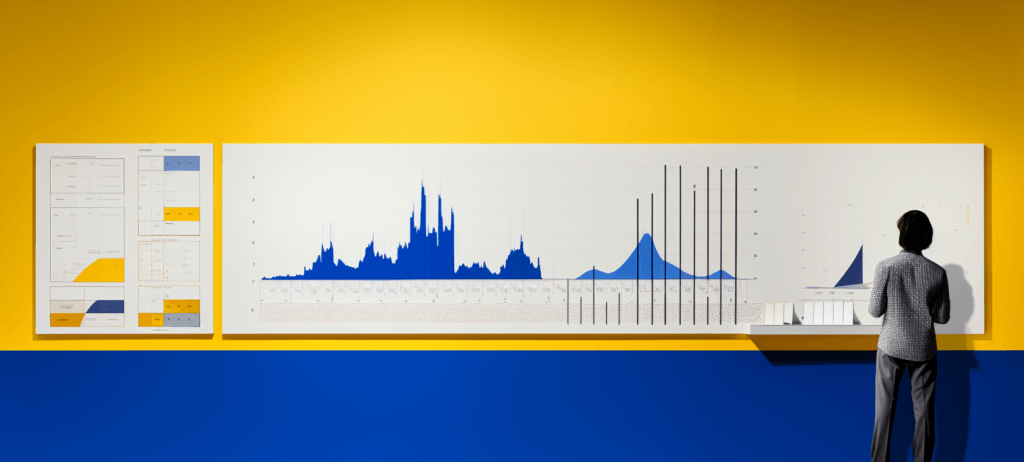

Measuring the metrics that affect your bottom line.

Are you interested in custom reporting that is specific to your unique business needs? Powered by MarketingCloudFX, WebFX creates custom reports based on the metrics that matter most to your company.

- Leads

- Transactions

- Calls

- Revenue

Turn your data into useful insights today

In today’s data-driven business world, it’s important to make the most of your data and turn it into useful insights to fuel your strategies.

If you need help with data management, consider teaming up with WebFX. A full-service digital marketing agency with over 28 years of experience, WebFX offers data management services to power your business strategies.

Our proprietary software, MarketingCloudFX, enables our clients to collect and store their data in one platform, thus improving their workflow. As a result, they have increased their marketing return on investment (ROI) by 20%!

Let’s work together. Call us at 888-601-5359 or contact us online to speak with a strategist!
